Once you generated something and the website then changed, you needed to start from scratch again, and all the custom parsing code we had written was gone. We couldn’t export what we wrote, put it back inside the Web Scraper extension, edit the selector and paste it back. We liked the interface of Web Scraper (webscraper.io), but it was limited in its data parsing capabilities, at least for our use cases. So we went ahead and built a library to do it, using some open source components from the Web Scraper extension and Parsel from Scrapy. We like YAML: it has always been readable, like Python code.


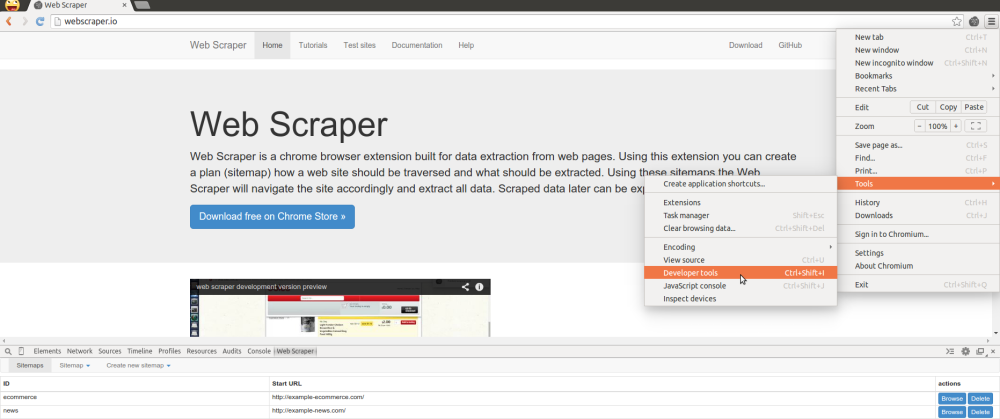

There were more tools, but all of those required us to use their cloud platform. We already had a pretty good web crawling cloud that we had built over the years and perfected to crawl about 3,000 pages per second (we don’t usually do that; we try to be nice to websites). So we went ahead with WebScraper.io’s Chrome extension, marked up webpages and built functional scrapers with it, and wrote a tool to convert those “Sitemaps” into real scraper code in Python that we could run in our cloud for our customers. It worked for a few scrapers, but our team was very hesitant to adopt it, as they had to generate the code first, and then a lot of parsing was required to get the data into the format we needed. Another problem that came up was that the developers never really liked the code that we generated. This solved the first problem, but we still had the second.

The ratings in such reviews are presented in a text format similar to "4.7 stars/5 stars", nested as Product -> Review -> Ratings -> Rating. It usually takes a few for loops, and maybe a few if/else conditions, to get to that data. This takes almost another two hours. All this for a relatively small scraper. It was during that time that we looked at some of the open source visual web scraping tools, like Portia for Scrapy and the Web Scraper extension for Chrome (which was open source back then).
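The nested Product -> Review -> Ratings -> Rating traversal mentioned above can be sketched with the Python standard library alone. This is only an illustration of the hand-written loops and text parsing involved, not code from Selectorlib: the markup, the class names, and the helper names `parse_rating` and `extract_product` are all hypothetical, and the sample page is assumed to be well-formed so that `xml.etree.ElementTree` can parse it.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup standing in for a product page.
PAGE = """
<div class="product">
  <h1>Example Widget</h1>
  <div class="review">
    <span class="author">Ann</span>
    <ul class="ratings">
      <li class="rating"><b>Quality</b> <i>4.7 stars/5 stars</i></li>
      <li class="rating"><b>Value</b> <i>4 stars/5 stars</i></li>
    </ul>
  </div>
  <div class="review">
    <span class="author">Bob</span>
    <ul class="ratings">
      <li class="rating"><b>Quality</b> <i>3.5 stars/5 stars</i></li>
    </ul>
  </div>
</div>
"""

# Matches text such as "4.7 stars/5 stars" or "4 stars/5 stars".
RATING_RE = re.compile(r"(\d+(?:\.\d+)?)\s*stars?\s*/\s*(\d+(?:\.\d+)?)\s*stars?")


def parse_rating(text):
    """Turn '4.7 stars/5 stars' into (4.7, 5.0); return None if it does not match."""
    match = RATING_RE.search(text or "")
    if match is None:
        return None
    return float(match.group(1)), float(match.group(2))


def extract_product(markup):
    """Walk Product -> Review -> Ratings -> Rating with explicit loops."""
    product = ET.fromstring(markup.strip())
    result = {"name": product.findtext("h1"), "reviews": []}
    for review in product.findall("div[@class='review']"):
        ratings = {}
        for rating in review.findall("ul[@class='ratings']/li[@class='rating']"):
            parsed = parse_rating(rating.findtext("i"))
            if parsed is not None:  # skip ratings whose text is not in the expected format
                ratings[rating.findtext("b")] = parsed[0]
        result["reviews"].append(
            {"author": review.findtext("span[@class='author']"), "ratings": ratings}
        )
    return result


if __name__ == "__main__":
    print(extract_product(PAGE))
```

Even for this toy page, every field needs its own selector plus its own formatting step, which is the kind of repetitive work described here.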

Selectorlib was built out of frustration. At Scrapehero, we build about a hundred spiders a week for many websites, and about 60% of them are for new websites, ones that we have not heard of before. These were relatively uncomplicated websites. The data needed was right there in the HTML, and not a lot of transformation was required. Part of the frustration was that there were quite a lot of data points to grab from the website for each row of data. Some might be in a nested format, with parent and child elements grouped together in different combinations, and we needed to format each of those fields. We had to find the XPaths or CSS selectors for, say, 30 data points on a page. This took about an hour to find, test and set. As an example, assume that we are dealing with the reviews of a particular product, with each review having 5 types of ratings.


Selectorlib is a combination of two packages: a Chrome extension that lets you mark up data on websites and export a YAML file with it, and a Python library that reads this YAML file and extracts the data you marked up on the page.
